Once upon a time, seeing was believing. A photo, a video, a recording: these were the receipts. Evidence. Proof. If you wanted to convince someone that Elvis was alive, you’d better have a blurry Polaroid to back it up.

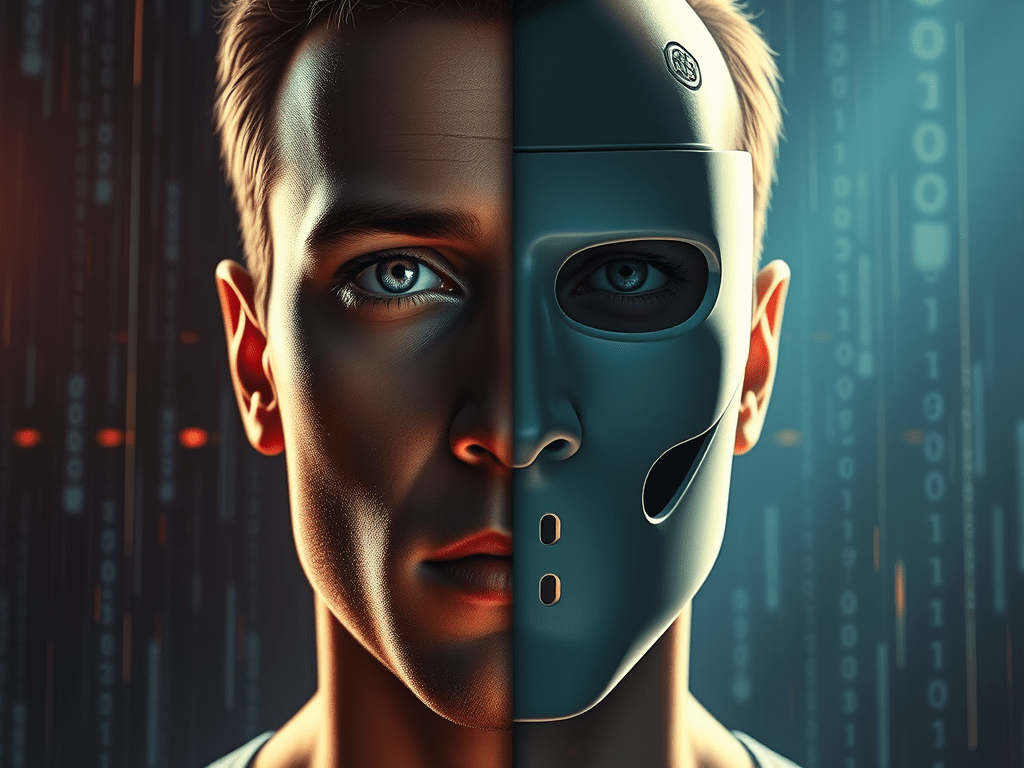

But now? A video doesn’t just lie, it lies convincingly. Deepfakes have made sure of that.

Deepfakes are AI-generated media that can realistically swap faces, mimic voices, and stitch together “proof” that never happened. Many of them are powered by machine learning models called generative adversarial networks (GANs): basically one AI playing dress-up while another AI critiques it, round after round, until the illusion is nearly perfect. The result? A reality so fragile that trust becomes optional.

Let’s pause here: what happens to society when truth itself is editable?

On the funny side, sure, we’ve all seen Nicolas Cage’s face on every possible character in cinema history. That’s harmless (mostly). But then there’s the darker side: political disinformation, synthetic revenge porn (which Amnesty International has called a human rights crisis), and the erosion of trust in journalism. In 2019, Facebook, Microsoft, and multiple universities launched the Deepfake Detection Challenge, a multimillion-dollar project to fight this exact problem. Because if you can’t trust what you see, what can you trust?

And here’s the real kicker: even real evidence now comes with a question mark. Someone caught on camera doing something? They can just shrug and say, “That’s a deepfake.” Reality becomes negotiable. Welcome to the era of plausible deniability, where nothing sticks and accountability slips right through our fingers.

So where does that leave us? Do we ban the tech? Regulate it? (The EU is already trying: its AI Act requires deepfakes to be disclosed as AI-generated.) Or do we accept that truth, like fashion, now comes in seasons, subject to remix, revision, and maybe even resale?

The ethics of deepfakes isn’t just about what’s possible. It’s about what’s believable. And once that line blurs, the question becomes: who controls the narrative?

If you like wandering down these messy tech rabbit holes with me, subscribe for more musings from your friendly dev girl who still has trust issues… with both code and reality.
